The Data Lab Trap

Have you ever wondered why so many Internet companies use algorithms across their core business processes to drive automation and constantly optimise their business, while your algorithms never really left the lab? You are not alone. Many large organisations have invested heavily in data science and big data over the past few years, yet often struggle to scale their successful machine learning projects beyond a small pilot scope. Something went wrong when we tried to copy the digital players: algorithms stay in the lab and are not put into the heart of the enterprise. This article sheds some light on how traditional big corporates can take a leap forward towards the digital players. It builds on my previous article "10 Rules for Data Transformation in Inherently Traditional Industries".

Dr. Alexander Borek, Global Head of Data & Analytics, Volkswagen Financial Services; Alexander.borek@vwfs.io

Alexander will be speaking at the Enterprise Data & Business Intelligence and Analytics Conference Europe, 19-22 November 2018 in London, on the subject "Data Science for Grown Ups: How to Get Machine Learning out of the Lab to Scale it Across the Enterprise".

How we all got there

A few years ago, the business world realised that Machine Learning, Big Data and AI can generate new value out of the large amounts of data produced by the digitalisation of the business. New tools, new types of databases and the rise of cloud computing made it possible to combine and process high volumes and diverse formats of data very flexibly. New ways of working between business and IT, aimed at delivering business value rapidly, were introduced in the tech startup world and copied by more established businesses, bringing new agility. Machine learning and AI methods entered business life, with the effect that processes can be increasingly automated.

We hired plenty of data scientists and let them do magic. Inside the lab, use cases somehow worked, and we could solve complex business problems very quickly. But when we transported them to the real world, they suddenly broke down and collapsed. Outside the lab we often find a hostile environment, which creates a number of challenges and threats for our precious little algorithms:

• Different toolsets and architectures

• Cloud cannot be used

• Legacy IT Systems

• Slow and complex purchasing and approval processes

• Risk, security, data protection, regulations and compliance issues

• Deployment procedures unfit

• Traditional IT operations unfit

• Data quality issues

• Inconsistent data models

• Strong cultural resistance

• Data scientists are inexperienced and do not know "how the company is run"

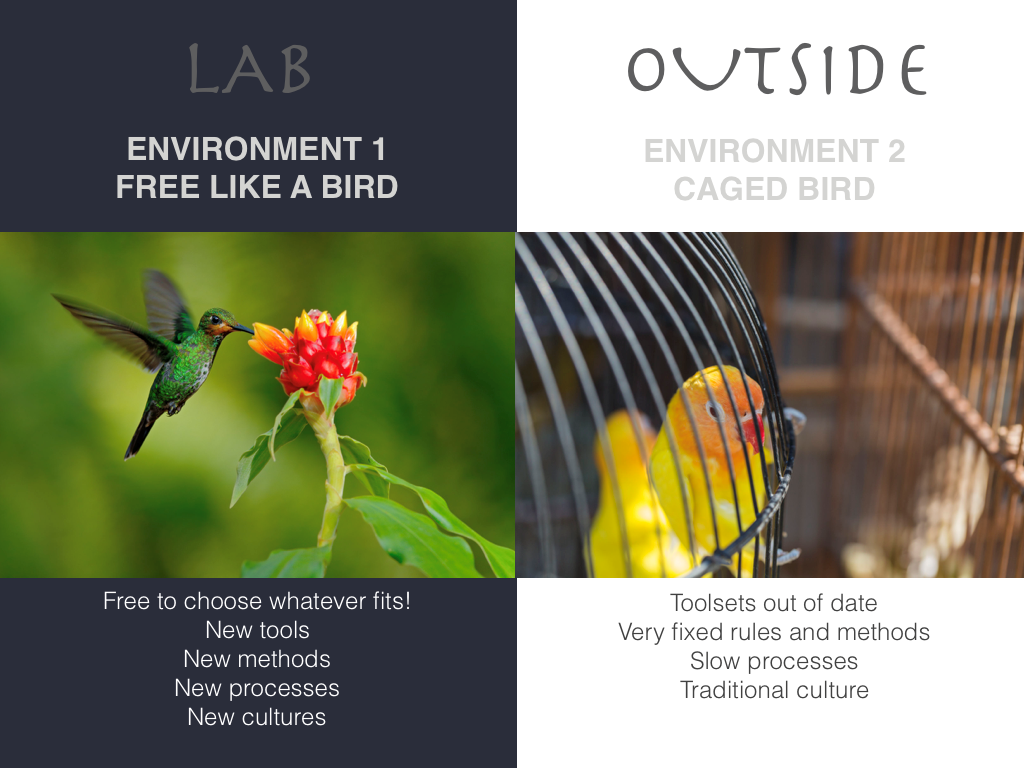

This is because we never fixed these problems. We just tried to create a new free space where innovation could grow and flourish within a safe environment, the Innovation Lab, where more or less everything was allowed. What works inside the capsule of an innovation lab does not necessarily work in outer space, i.e. the rest of the organisation. Many organisations simply ignored the fact that anything that comes out of the lab will be dead within seconds once it leaves the "free like a bird" environment and enters the "caged bird" environment that we are used to in large corporates. Many IT organisations feel threatened by the labs and have little motivation to help them bring successful prototypes into production. Regulations such as GDPR are seen as helpful allies for rejecting the work of the labs as unrealistic and non-compliant.

The Data Factory can build the bridge between the two worlds

One way or another, we need a bridge between the two environments to deliver data analytics & AI products successfully. In my opinion, this bridge is the Data Factory. The Data Factory needs to replace or extend the Data Lab to ensure that new innovative projects can be executed and then scaled more easily into the rest of the organisation. A Data Factory has at least five key components.

(1) The Data Platform ensures that technologies are available outside the capsule. It provides a common set of state-of-the-art data analytics & AI tools for the sandbox, development and production stages, which means that an idea for an algorithm can be explored and later operated in the same technological environment. Standardised programming languages (e.g. Python), tools and packages are used throughout all phases of the project. Security, data protection and compliance are ensured on the platform, so the algorithm developer does not need to worry so much when the algorithm leaves the lab. The platform also provides central data storage for productive data as part of a joint data lake and data catalog, along with standardised access and interfaces to legacy IT systems.

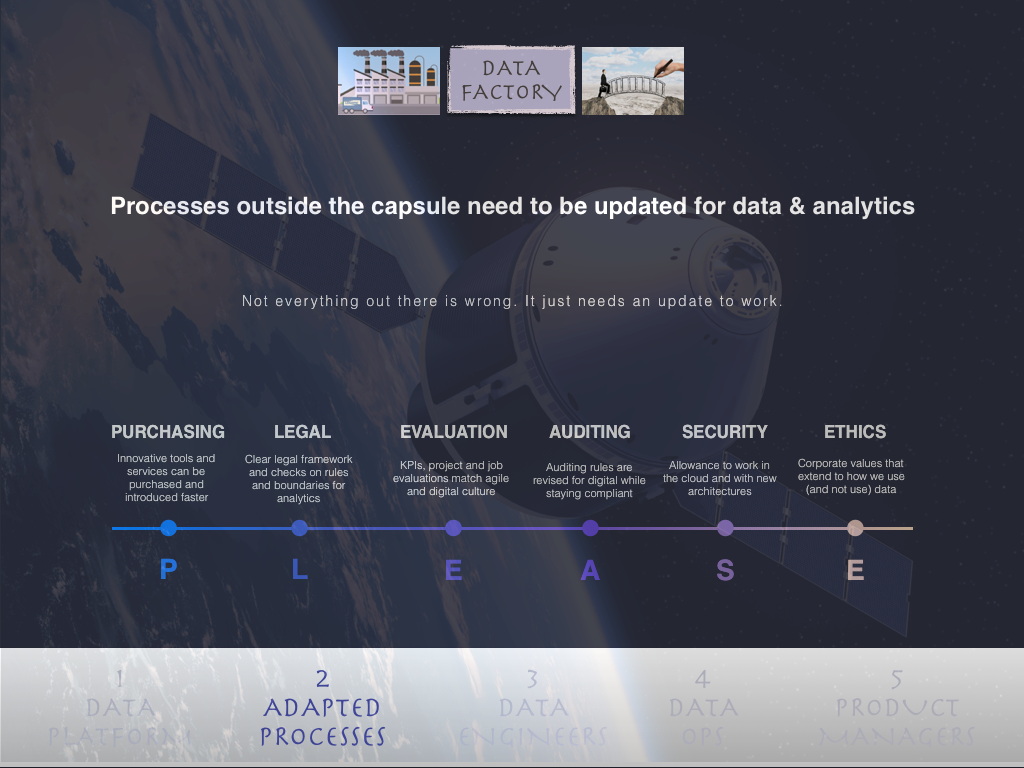
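To make the idea of "one technological environment for all stages" more concrete, here is a minimal Python sketch. It assumes a hypothetical setup in which the scoring code is identical in sandbox, development and production, and only a small stage configuration differs. The names `StageConfig`, `STAGES`, `score`, `run` and the `lake://` URIs are invented for illustration and do not describe any particular platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StageConfig:
    """Hypothetical per-stage settings; only these differ between stages."""
    name: str
    data_uri: str        # where the stage reads its data from (made-up URIs)
    write_enabled: bool  # whether results may be persisted


# Assumed stage definitions; a real platform would load these from its own config.
STAGES = {
    "sandbox": StageConfig("sandbox", "lake://sandbox/contracts", False),
    "development": StageConfig("development", "lake://dev/contracts", False),
    "production": StageConfig("production", "lake://prod/contracts", True),
}


def score(record: dict) -> float:
    """Toy model: risk grows with days overdue, capped at 1.0."""
    return min(1.0, record["days_overdue"] / 90)


def run(stage_name: str, records: list) -> dict:
    """Run the same scoring code in any stage; only the config is swapped."""
    cfg = STAGES[stage_name]
    return {
        "stage": cfg.name,
        "source": cfg.data_uri,
        "scores": [score(r) for r in records],
        "persisted": cfg.write_enabled,
    }
```

Because the model code never changes between stages, a prototype explored in the sandbox produces the same scores in production; what changes is only where data comes from and whether results are persisted.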

(2) Processes outside the capsule need to be updated to ensure they can handle data analytics & AI projects. They include at least the PLEASE processes: Purchasing, Legal, Evaluation, Auditing, Security and Ethics.

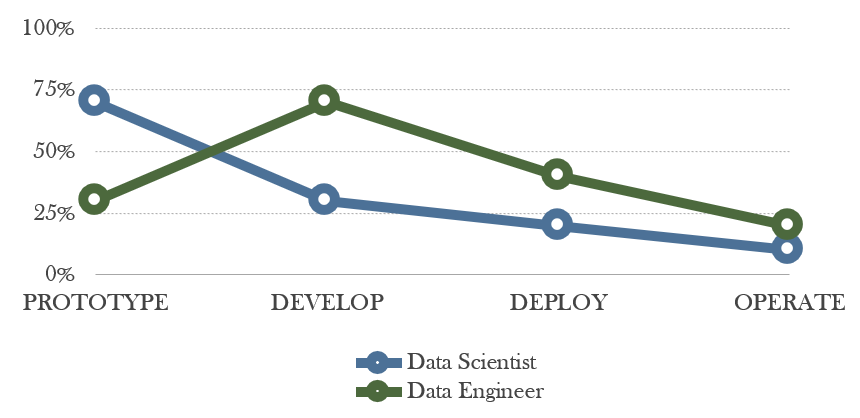

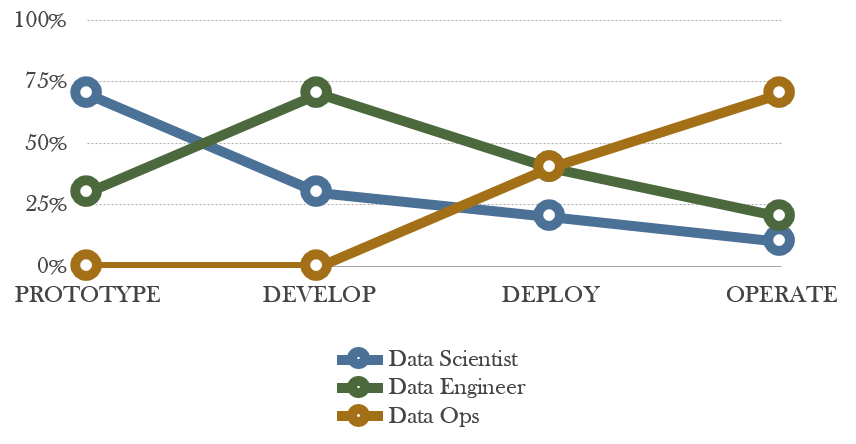

(3) Data Engineers are usually more important than data scientists after prototyping, but many companies have hired a lot of data scientists and not enough data engineers. They ignored that once the analytical model is designed, the work is mostly software engineering! Data engineers focus on software engineering rather than modelling. Software and architecture skills are key for developing ETL processes, integrating and cleansing data ad hoc, writing APIs and database connections, creating CI/CD pipelines and DevOps practices, testing and deploying data products, and adding new components to the platform.

(4) Data Ops are needed to support the operation and maintenance of finished data analytics & AI products. Data Ops covers tasks that data scientists perceive as unattractive, e.g.:
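As a small illustration of the engineering work described above, the following Python sketch shows what a testable data cleansing step might look like. The record layout (`customer_id`, `country`) and the function name `cleanse` are assumptions made for this example only. The point is that such a step is ordinary software that can be unit-tested and run in a CI/CD pipeline.

```python
def cleanse(rows):
    """Drop rows without a customer id, remove duplicates, normalise country codes.

    `rows` is an iterable of dicts with the hypothetical keys
    'customer_id' and 'country'.
    """
    seen = set()
    cleaned = []
    for row in rows:
        customer_id = (row.get("customer_id") or "").strip()
        if not customer_id or customer_id in seen:
            continue  # skip missing ids and repeated customers
        seen.add(customer_id)
        # Empty or whitespace-only country values become None.
        country = (row.get("country") or "").strip().upper() or None
        cleaned.append({"customer_id": customer_id, "country": country})
    return cleaned


def test_cleanse():
    """A unit test of the kind a CI/CD pipeline could run on every change."""
    raw = [
        {"customer_id": " 42 ", "country": "de"},
        {"customer_id": "42", "country": "DE"},  # duplicate after trimming
        {"customer_id": None, "country": "fr"},  # missing id
        {"customer_id": "7", "country": " "},    # blank country
    ]
    assert cleanse(raw) == [
        {"customer_id": "42", "country": "DE"},
        {"customer_id": "7", "country": None},
    ]
```

Calling `test_cleanse()` directly, or collecting it with a test runner, checks the step before it is deployed.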

• Rules for deployment

• Helpdesk and Ticketing

• ITIL processes

• Managing SLAs

• Logging

• Archiving

Furthermore, IT Operations and Data Scientists often speak different languages. Data Scientists feel misunderstood because things are complicated and slow. IT Operations are irritated by what they perceive as the data scientists' ignorance of corporate processes.

(5) This is where the Data Product Manager comes into play! The Data Product Manager is fully involved from the start to the end of the data analytics & AI product. The Data Product Manager is a true multi-talent in data science and management. He or she holds end-to-end responsibility for the data analytics & AI product, which includes:

• Ideation and data product definition

• Product owner in scrum approach

• Managing all stakeholder relations

• Accountable for deployment

• Ensuring SLAs during operation

• First contact for change requests

• Change management

The Data Product Manager understands the Data Scientists and Data Engineers, but also speaks the language of more traditional business functions. He or she is the key person to bring change within your organisation and helps to create the cultural bridge between the two universes within your corporation.

Obviously, there are further important elements that should be considered as part of every successful Data Factory and that I did not mention here. Nevertheless, the Data Factory concept presented in this article should make you rethink how you organise Data Science, AI and Business Intelligence across your enterprise. Perhaps a key learning is that Data Science, AI and Business Intelligence are closer than you think and should be brought as close together as possible!

Dr. Alexander Borek is a data analytics executive, evangelist and thought leader. In his current role, he serves as the Global Head of Data & Analytics at Volkswagen Financial Services, which provides the financial products for the automotive brands of the Volkswagen Group. He runs an international operative unit consisting of data scientists, data engineers, product managers and UX experts to develop and operate high-end data analytics products. Before that, he led the data analytics transformation at Volkswagen Group and worked as a consultant at Gartner and IBM, where he advised Global Fortune 500 executives in multiple industries. Dr. Borek is the author of the books "Total Information Risk Management" and "Marketing with Smart Machines". He holds a PhD from the Institute for Manufacturing at the University of Cambridge.

Copyright Dr. Alexander Borek, Global Head of Data & Analytics, Volkswagen Financial Services