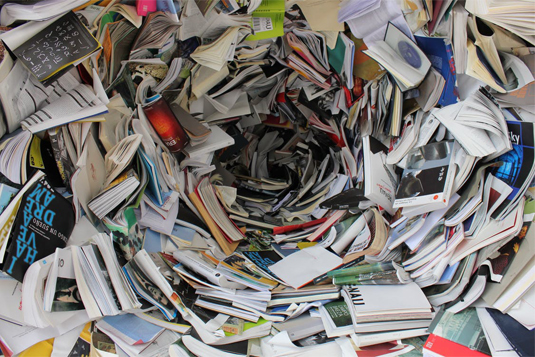

This week I gave a TED-style presentation on the History of Analytics to a group of our Digital Solution Architects: 20 minutes, a whiteboard, and the truth. What's not to love for a storytelling consultant? Analytics has been moving steadily from the hinterlands to the center of the stage, and it is set to become even more prominent: the lead actor. Information and its intelligent use have often driven revolutions in society. I've written about how Gutenberg's printing press powered the last Big Data revolution, one that is credited with the Renaissance and the Enlightenment.

Bernard Panes, Analytics Solution Architect, Accenture, bernard.r.panes@accenture.com

Bernard will be speaking at the Enterprise Data and Business Intelligence & Analytics Conference Europe, 20-23 November 2017, on the topic "In a Chaotic World, How Do You Solve a Problem Like Analytics?"

This article was previously published here.

Florence Nightingale's Rose visualization is an early example of visual analytics changing the direction of medicine. It is also a metaphor for the data-enabled scientific revolution, the industrial age, and the move into the information age. So, how did we get here? And what comes next?

1960s: Decision Support Systems

The '60s were the second era of computing, laying the groundwork for the microprocessors and personal computers that followed. Taking advantage of this, Decision Support Systems (DSSs) basically meant using a computer to help with decision making. They were, of course, limited by processing power and by the data we could get hold of, so DSSs were used for only a handful of niche use cases.

1980s: Management Information Systems

The continued rise of digitization and computer systems meant that more and more of an organisation's core operations were computerized. This greatly increased the scope for managers, in particular, to gather information so that they could work out what was going on and back up their intuition about who to fire with some quantitative metrics… MI (Management Information) reports were often an afterthought when building the business's operational systems.

1990s: Business Intelligence

MI reporting started to be branded as BI in the '90s, and with it the term 'Data Warehouse' became mainstream. An ever more complex landscape of operational systems made gathering the data for a useful 'report' very challenging. A Data Warehouse seemed like a neat solution: gather all of the data and store it in one place in a tidy way, with all of the definitions aligned, quality assured, data connected, and a full history.

These often didn't work, because it's really difficult to get an entire company to agree on what means what, and then to plumb in data from every part of a complex and moving system landscape… Often these Data Warehouses became white elephants: they took years to build, still weren't complete, and nobody trusted the reports anyway.

2000s: Self-Service BI & Proliferation of Grey IT

Because Data Warehouses were such a pain, and the internet had given everyone a bit of computer savvy and higher expectations, departmental heads started to bypass the constraints of centralized corporate IT. They started building their own local BI solutions, first in MS Excel (the most popular BI tool of all time) and later with some great data visualization tools from the likes of Qlik and Tableau.

This was wonderful for the department, and also great for IT strategy consultants… who could make loads of money telling big companies that they needed an enterprise strategy to integrate all of their information systems to eliminate the waste from grey IT and waste it on an even bigger IT project instead.

2010s: Big Data

If the 2000s were the childhood of the internet, the 2010s were where things really got going, with mobile technology in the mix. Every strategy presentation had an Uber or Facebook logo on it, and business leaders all over the place were being read a horror story called 'Innovate or die'.

In data terms, all this meant an explosion in data volume, variety, and velocity. Big Data was the fashion, but only the California-based big tech companies knew how to do it, because they made the technology.

Traditional data warehouse technologies were clearly getting out of their depth, and luckily a new set of technologies came into being. Hadoop (big and cheap) and NoSQL / in-memory databases (fast) came into play to complement the DW. Data Lakes became the buzzword for a new type of approach ("chuck in the data and work out how to use it later"), and new techniques emerged for visualizing data…

We were, however, still in a paradigm that needed a person to look at the data and work out what to do, even though we had moved from Descriptive analytics (telling someone what had happened) to Predictive analytics (telling someone what might happen).

Now: Advanced Analytics

Machine learning is becoming widespread, acknowledging that some of this big data is just too big for a human brain to make sense of through visualization alone, and that we're going to need some complex statistics to work out what is going on. Prescriptive analytics has become the new approach: "I'll tell you what happened, what will happen, and what you should do now to make an even better thing happen".

All of the information challenges that came with the Data Warehouse still exist, and so do Data Warehouses, for certain high-quality aggregated reporting needs. However, the new data comes from everywhere; the company doesn't control it or even own it.

Thanks to 'Data Democratization', the user has changed too (thank you, Mobile and IoT!). If you think the notion of 'management' information is elitist, then we're in a good place. The insight can now be shared with all of the employees, the customers, the suppliers, the machines, the citizens, the robots, and in fact anyone or anything.

Next?

Well, some technology is going to come along and disrupt things even more: blockchain as a high-trust distributed database, immersive technologies as a new channel for consuming data, and (general) artificial intelligence at the core of new approaches to making sense of data. All of these place analytics, the general discipline of getting data and making sense of it, at the center of nearly everything humans will do in the next decade. A long way from a mere decision support system… or is it?

Perhaps the key change is the word 'Prescriptive'. If our information system can tell a person what they should do, then how long until the person becomes entirely unnecessary? If the system knows what needs to be done, just tell the robot to do it and let me go surfing!

Obviously, this article is deliberately playful and does not represent the views of Accenture, whom I work for. I'm not even sure it represents my own views.

Bernard Panes is an Innovator within Accenture's Digital practice. As an Analytics Solution Architect, he shapes large, complex transformation programs for clients in many domains. Despite his expertise, he is sometimes sceptical about how anyone can assure or plan delivery in an ever more chaotic digital world. Follow Bernard @bernardpanes

Copyright Bernard Panes